Production Outages: How Mindset, Soft Skills & Inner Peace Play a Pivotal Role.

As software engineers, we spend most of our time making our products and services more operable, reliable, observable, scalable, etc., with one simple goal: make sure the product is highly available and is performing within the SLA. If a defect reaches production, causing an outage, we focus on repairing it quickly to lower the TTR (time to resolve). What most of us don't realize is that an outage is about more than just keeping the TTR low (yes, TTR is very important); it's also about managing the expectations of the customer. After all, your customers don't expect five-nines reliability from you; they expect five-nines customer service from you. There is a good reason for that. The devices they are using to access your product/service are themselves not very reliable. They are used to downtime and things being a bit flaky. They are fine with it. They will not abandon you for going down; they will, however, leave you for a bad/terrible customer experience.

Outages are also about managing (the expectations of) your boss and your boss's boss; they are also about managing the expectations of the teams that depend on your service to do their jobs. It's a complex situation (analogous to your house being on fire), where emotions are high and people's adrenaline is rushing. Gone are the days when the impact was measured in just the revenue lost; although important, the overall impact is much more than a monetary loss. Things like the perception of your brand, customer focus & obsession, reputation, etc., actually matter more in the long run.

Most of us are unfortunately not equipped with the right (soft) skills and the mindset needed to effectively manage an incident/outage. After all, no one teaches us these things in school or in a course. The technical/debugging/problem-solving skills are table stakes, of course. What is often not talked about much is how your mindset, behavior, soft skills, and some common-sense practices play an equally important role in dealing with an outage effectively.

Mindset

First, to do an effective job during the outage, you need to have the right mindset. Based on my experience dealing with outages for more than a decade, here's how I would categorize engineers dealing with an outage (directly or indirectly).

Type A: "Customer-focused"

Engineers who put the customer first have a strong empathy towards their customers. This is something they have developed by using the product themselves (as an actual customer/user) and feeling the pain when something isn't working as expected. They not only display a strong sense of urgency in mitigating the impact but also truly understand the need to keep customers in the loop and informed. It doesn't matter if it is 3 am or 3 pm, their response is the same. They have a strong sense of integrity; meaning, they will do the same thing when no one is watching.

Among many other awesome things, they own their (code) pushes/deployments (assuming their deployments are push-button kind); they take time to understand how their changes impact customers (external and internal). As you all know, it is so frustrating to be in an outage and ask like a million times, "what changed?", and not get any response only to find out the person who made the change isn't even aware (having lunch or went home or doesn't care). What a shame!

Type B: "Boss-focused"

They treat outages like a job (which is not too bad in itself) and do it because someone higher up is asking them to or cares. The day those higher-ups stop caring, they stop caring as well. They may have the potential, but not the drive. The incident response is mostly "best-effort" at best. There is no real thought about the customer. They have a "this-will-eventually-be-resolved" attitude. With too many of them around, the culture eventually hits an inflection point, and that's when things start to go south.

Type C: "The rest"

Mostly neutral folks. Love to coast along. They dread waking up at 3 am; hate being on call; hate dealing with production outages. Hating is OK, but they don't really do anything about it (to try and make things better for everyone). They put up with it either because they are part of a large on-call rotation (only have a painful week once every six months) or because they have other teams shielding them from the alerts and the pain.

Some want to do the right thing, but don't yet possess most of the soft skills needed. They are junior, shy, and not very assertive. They haven't yet developed the empathy needed to have a type-A mindset; they can be taught and coached on developing it though.

Customer Obsession

What type are you? Ask yourself. If you are one of the latter two types, do some soul searching and reflection about how you can hack yourself into developing a type A mindset. After all, businesses are all about the customer; if you don't have a customer-focused mindset, then almost always the customers and businesses suffer as a result.

While you are busy analyzing distributed traces, running and analyzing packet captures, looking at a million graphs trying to figure out anomalies, running some commands across thousands of machines, going through all the recent changes pushed to prod, analyzing the last million commits, etc., you also need to be doing the following to keep your customers (who may rely on your product for personal or business reasons) and stakeholders (who need this information to do their jobs) in the loop and updated.

1) First, send an outage notification. Do it NOW.

This is more important than jumping straight into the problem. This can be counterintuitive for most people. This information --which only you have-- is needed by the people who depend on it to do their jobs. It is analogous to the series of steps someone takes in response to a clogged toilet at home or work. Before anything else, it is important to put up a sign that this toilet is under maintenance and closed (before attempting to fix the underlying issue), so people don't make things worse by trying to use it. The proactive communication also helps people manage and plan their schedules and activities to work around this.

Also, keeping your boss, and boss' boss in the loop is equally important. This is done for a good reason. Your boss will be unhappy when he/she finds out from his/her boss about an outage. Your boss' boss will be calling your boss first anyway (that is how escalations work).

The notification (email, Slack, etc.) is especially helpful for large, geo-diverse teams. You will be surprised that some people take this stuff seriously and really appreciate such notifications. It is also important to send periodic updates. This is table stakes.

Also, I strongly prefer to have the collaboration happen over a voice bridge; make sure to set one up asap (in addition to Slack/IRC). Engineers generally don't like this, but it is so important for real-time (RT) communication to happen, so you don't have to wait for a long time over chat to get a response to questions like "was the rollback completed?", "what's the ETA?", "what is the latest?", etc.
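To make "send it NOW" cheap, it helps to have the notification reduced to a fill-in-the-blanks template. Here is a minimal sketch in Python; the function name, the field names, and the sample bridge name are all made up for illustration, and the delivery mechanism (email, Slack, etc.) is deliberately left out.

```python
def outage_notice(what: str, impact: str, status: str,
                  next_update_minutes: int, bridge: str) -> str:
    """Build a short, plain-text outage notice with a call to action.

    All fields are hypothetical; adapt them to whatever your SOP requires.
    """
    lines = [
        f"OUTAGE: {what}",
        f"Impact: {impact}",
        f"Status: {status}",
        f"Join: {bridge}",
        f"Next update in {next_update_minutes} minutes.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(outage_notice(
        what="East Coast data center network outage",
        impact="all properties",
        status="investigating",
        next_update_minutes=30,
        bridge="#incident-bridge",  # hypothetical channel name
    ))
```

The point of the template is that the first notice can go out before anyone knows the cause; the "next update" line is what commits you to the periodic updates.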

2) PR & Social Media

Hopefully, you know about the outage before your customers. Even if that's not the case, it is critically important to keep your customers, users, and partners in the loop. Develop an SOP that can be followed during outages. Involve your public relations teams, your customer service teams, your social media and community relations managers asap.

If you have JVs (joint ventures), or partners that rely on your service, get them involved asap. For example, Yahoo provides the data for Apple's "Stocks" app on your iPhone. Each time there is an issue with Yahoo Finance, we get them involved through one of our POCs.

A side note: not just during an outage, you must constantly be in touch with your customers, understanding their needs, getting to know their pain points, and just engaging with them in general. Be sure to monitor Twitter feeds, community forums, and other social media. I once worked with a senior leader who was obsessed with our products and customers: he was always using our products; always engaging with our customers. He just knew the pulse of the customer.

3) One Stop (Outage) Shop

When you have thousands of people working at your company, you automatically have thousands of eyes monitoring your products as part of their day-to-day. Leverage them to report and help with outages. It's just a fact of life that we can't always detect issues before our customers/users (great aspirational goal to have though; would love to hear if anybody is truly there). There should be one place people can go to across the company to report outages. It should be as easy as sending an email with the details (I have had great success with this at several places). There was an email alias that was drilled into people's heads and posted on multiple internal wikis, so people just knew it. People just knew that merely sending a brief email to that alias guaranteed a 15-minute response from the on call. No questions asked. People loved it; they really appreciated it. Remember, just like anything else, the harder you make this, the greater the barrier to entry.

Also, there should be one place --that everyone is intimately familiar with-- where people can go to check the latest status of the outage. Your employees and customers must be familiar with this and go there for the latest. This could be your community forum, your enterprise Twitter feed, corp Slack channels, IRC, your user voice page, your service status page. Whatever it is, make sure there is one. Make sure it is widely and constantly communicated across the company (trust me, communication is never enough in this context).

A related note: In the ideal world, every team that provides any service is behind an API. Teams talk to each other through an API. There are contracts established between the upstream and downstream. When there is an issue, reaching the on call should be a matter of clicking a link. And, guess what, by the time you join Slack after being paged for a downstream latency issue, the on call is already there. Their status page is up-to-date so anyone can quickly get up-to-speed. I haven't yet been fully successful in getting here, but dream of getting there one day.

Mitigate Impact First

Of course, mitigating the impact must be your priority (well, along with keeping all the stakeholders in the loop using some of the techniques described above). I am going to cover a few techniques here, which, based on my experience, most people haven't mastered yet, or don't fully realize that there is room for improvement.

1) Show me the impact

You first need to understand the scope. For that, you must have a graph or dashboard that shows the scope of the impact. Some of the first questions I like to ask when there is an outage are: "What is the impact?" "How many customers are impacted?" "Can you show me the graph that shows the impact?" It all boils down to one simple question: what is the impact? Show me the graph.

If you don't get good answers to these questions or don't have a quick way to get to the answer, that means you haven't done a good job of instrumenting your application or haven't done a good job of building dashboards that can be used to visualize product health. So, essentially, you don't have much of a clue about what your customers are experiencing. Bad. Go fix that now.

Being able to answer these questions means that you are in a better position to strategize your response. It also means that you actually know for real that the impact has been mitigated (as opposed to hoping that the impact was mitigated). Usually, graphs like RPS or latency should return to normal soon (once the impact has been mitigated) and follow your regular trend.
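One way to make "what is the impact?" answerable in seconds is to compare current traffic against the expected baseline. The sketch below is illustrative only: the function names, the 5% tolerance, and the choice of requests per second as the health signal are my assumptions here, not a description of any particular monitoring stack.

```python
def impact_percent(baseline_rps: float, current_rps: float) -> float:
    """Share (in %) of expected traffic that is currently not being served."""
    if baseline_rps <= 0:
        raise ValueError("baseline_rps must be positive")
    lost = max(baseline_rps - current_rps, 0.0)
    return 100.0 * lost / baseline_rps


def is_mitigated(baseline_rps: float, current_rps: float,
                 tolerance_pct: float = 5.0) -> bool:
    """True once traffic is back within tolerance_pct of the baseline.

    The 5% default is an arbitrary placeholder, not a recommendation.
    """
    return impact_percent(baseline_rps, current_rps) <= tolerance_pct


if __name__ == "__main__":
    print(impact_percent(1000, 750))  # a quarter of expected traffic is missing
    print(is_mitigated(1000, 980))
```

A number like this is what lets you say "mitigated" because the graph says so, rather than because someone hopes so.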

2) BCP/Failover that colo. (NOW).

I get frustrated when I hear people say, "this will be fixed soon, we don't have to BCP out a colo". I am like, we spent all this time and energy on having our app run in a true multi-colo setup. But, when it's time to do something as simple as a colo failover, we hesitate. Such a shame.

If you are hesitating to fail out a colo, there may be a deeper problem. Bad architecture? BCP not tested? Etc. Evaluate, conduct a postmortem. Get to the bottom of the problem. Invest in training. Introduce "chaos engineering" into your applications. Break things intentionally. Make failure testing and discovery part of your culture; introduce "chaos" early on and embed it into your build/test environment. Write tests. Discover bugs and faults before they reach prod. Remember, failing out your colo must be an idempotent operation.

3) Rollback that change. (NOW).

If you are continuously delivering code to production via no-touch deployments using some sort of "metrics based promotion" or a "cluster immune system" (canary, feature flags, slow rollout, blue-green deployments), defects should ideally be caught before they go to prod fully. But, not everyone can get there. If you are pushing manually, using some sort of push-button deployments, then it is the responsibility of the engineer who initiated the push (not QA, Ops, release engineers, or whoever) to be around and monitor the push. If things are going south, that change must be rolled back immediately. If you've initiated a prod push, or if your commit is part of a push that batches several commits together and is deployed at a certain time of the day, every day (in a continuous fashion), stick around. You own your code pushes. No one else.

Containerized deployments make this so easy. Invest in a proper certification plan (the heart of CD is the certification plan) for releasing software with the proper CD gates in place.
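To make "metrics based promotion" concrete, here is a minimal sketch of a canary gate in Python. Everything in it is an assumption for illustration: the function name, the 1.5x ratio, the minimum sample size, and the absolute error-rate floor are placeholders, and real gates usually look at more than one metric.

```python
def should_promote(baseline_errors: int, baseline_requests: int,
                   canary_errors: int, canary_requests: int,
                   max_ratio: float = 1.5, min_requests: int = 1000) -> bool:
    """Promote only if the canary saw enough traffic and its error rate
    is not much worse than the baseline's. Thresholds are hypothetical."""
    if canary_requests < min_requests or baseline_requests <= 0:
        return False  # not enough data: hold rather than guess
    baseline_rate = baseline_errors / baseline_requests
    canary_rate = canary_errors / canary_requests
    # Small absolute floor so a zero-error baseline doesn't block every release.
    allowed = max(baseline_rate * max_ratio, 0.001)
    return canary_rate <= allowed


if __name__ == "__main__":
    print(should_promote(10, 10_000, 1, 1_000))   # canary matches baseline
    print(should_promote(10, 10_000, 50, 1_000))  # canary is clearly worse
```

Note the default when data is missing: the gate holds the release. A gate that promotes on "no data" is just a push-button deployment with extra steps.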

Rolling back a system to a previous known good state should be a seamless operation. Ditto for rolling forward to a known good state. In other words, these must be idempotent operations. If you are hesitating to do these, ask yourself why. Get to the bottom of the issue.
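What "idempotent" buys you here is that nobody has to remember whether the rollback was already run. A toy sketch in Python follows; the Cluster class is a made-up in-memory stand-in, not a real deployment API, and the version strings are placeholders.

```python
class Cluster:
    """Hypothetical in-memory stand-in for a deployment target."""

    def __init__(self, running_version: str):
        self.running_version = running_version
        self.changes = []  # audit log of (from_version, to_version)


def ensure_version(cluster: Cluster, target: str) -> bool:
    """Converge the cluster on `target`. Returns True if a change was made.

    Expressed as "make it so" rather than "go back one", so running it
    twice (or from two terminals) leaves the cluster in the same state.
    """
    if cluster.running_version == target:
        return False  # already there: repeating the call is a no-op
    cluster.changes.append((cluster.running_version, target))
    cluster.running_version = target
    return True


if __name__ == "__main__":
    prod = Cluster("v42")
    print(ensure_version(prod, "v41"))  # first call performs the rollback
    print(ensure_version(prod, "v41"))  # second call changes nothing
```

The same function covers rolling forward: the caller names the known good state, not the direction.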

Sometimes, the change that caused the outage will have been made weeks ago: perhaps a memory leak, or a slow build-up in memory/CPU/disk IO/network usage that reached a tipping point. If this is the case, rolling back recent changes will not help. After you have tried a couple, give up and start looking at the code commits that may be causing the error conditions in prod. This is the time when your Sherlock Holmes skills come into play. The better a detective you are, the faster you can get to the root of the problem.

4) Restart that cluster.

(Unfortunately) Restarts and reboots do amazing things. Of course, this is not guaranteed to help. But if your cluster is in a state where you have a thundering herd problem, a slow start and gradual ramp-up should help (some systems need time to warm up). If the underlying problem is not cleared with a restart, it usually means something about the traffic is causing the issue, or it is a code-level issue.

For example, you need to give your caches time to warm up. There are several ways to do this; there are also some pretty innovative things you can do to pre-warm your caches, so they are ready.
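A slow start can be as simple as a schedule of traffic percentages that you step through while watching the graphs. A minimal sketch in Python, assuming a linear ramp; the function name, the step count, and the starting percentage are arbitrary choices for illustration, and how each percentage gets applied (load balancer weights, feature flags, etc.) is out of scope.

```python
def ramp_schedule(steps: int, start_pct: float = 5.0,
                  end_pct: float = 100.0) -> list:
    """Return `steps` traffic percentages rising linearly from start_pct
    to end_pct. A linear ramp is an assumption; some systems prefer to
    start slower and accelerate once caches are warm."""
    if steps < 2:
        raise ValueError("need at least two steps")
    increment = (end_pct - start_pct) / (steps - 1)
    return [round(start_pct + i * increment, 1) for i in range(steps)]


if __name__ == "__main__":
    # Hold at each level until latency and cache hit rate look normal,
    # then move to the next one.
    for pct in ramp_schedule(5, start_pct=20.0):
        print(f"send {pct}% of traffic to the restarted cluster")
```
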

Same drill here if you are hesitating to do this. Restarts (of your stateless services at least) must be idempotent operations.

Soft Skills

Of course, having the right mindset, customer obsession, and some (cool) techniques to quickly resolve an outage are not enough. You also need to have the right mix of soft skills (people, social, communication, character traits, social/emotional intelligence, etc) to be able to effectively navigate a tricky, complex, high-pressure situation like a production outage.

1) Calm Down

The ability to remain calm under any situation or circumstance is arguably the most important skill of any leader; or of any human being. This can only come from a true sense of inner peace that is cultivated over many, many years. The inner peace gives you the ability to focus on the problem rather than the symptoms; people with inner peace are mindful of the fact that it's not about them or the other person; it's about the problem they are trying to solve. The rest of the skills needed are almost always a derivative of this. Without inner peace, the rest of your performance is always going to be sub-par. It is the foundation of everything else.

I once saw a senior leader join an IRC channel during a massive outage with 500 people in it (it was essentially a mess). He came in and said, "calm down". Those two simple words made an unbelievable impact. There was immediate silence after that; it was only then that people started to think more clearly and started asking productive questions.

If you have ever called "911", the first thing the operator would say is, "Sir/Ma'am, first, calm down". There is a very good reason for this. The precious moments spent giving details of the emergency clearly (as opposed to too much back and forth) may end up being the reason someone lives or dies. Also, it's pretty rare to see firefighters or police officers who are not calm, composed, and self-assured. They make us feel secure. In the same way, I tend to trust someone who knows what he/she is doing and has a sense of calm.

2) Speak out

The inner peace also gives people the ability to be comfortable asking good questions in a group setting. Most people are not comfortable speaking in a group setting. Perhaps this also has to do with how we have evolved (we did not want to become a tiger's lunch). But it is important that you are comfortable asking questions in a large group of 300-500 people, so you can cut through the noise, bring some clarity and focus, and get people working as a team and collaborating effectively to solve the problem.

Also, do not hesitate to call your boss, boss' boss, to keep them in the loop. I can guarantee that upper management would rather be called and woken up as opposed to finding out the next day or an hour later from their boss or the board.

If you have the right people leading an outage, everything will look like a well-coordinated, well-orchestrated effort.

3) Get straight to the point.

Some people have a hard time getting to the point. During an outage, you want people who can communicate clearly and precisely. Ruthless precision is important. When you call, just be ruthlessly precise. For example, just say, "East Coast Data Center Network outage impacting all properties, please join Slack". Precise communication with a call to action is pivotal.

Also, when you call someone at 3 am, do not apologize. Come straight to the point. Straight. You have already woken someone up; what's the point in apologizing? When I get woken up at 3 am, I don't really care about apologies; I just want to know what is going on and how I can help. The absolute worst calls are the ones that look like this, "Hello, my name is VVV, I am calling from HHHH incident management. First, I would like to apologize for calling you this late. Long pause. Uh. Ah. Are you still there? Yeah, so, again, sorry....". I am like, "Dude, come to the point; how can I help you?"

This is analogous to getting a call from your child's school. The first thing they say is "Your child is OK". Only then, they start getting into the details. So much more productive.

4) Don't be a jerk; have empathy.

Yes, it's all about the customer, but not at the expense of your employees and their families. Don't come into a room and blast people with questions in the middle of an outage without doing some sort of homework. First, calm down. Learn to trust your team. If you don't trust them; find someone else to do their job. Give them a chance. After all, outages are a team sport and trust is critical. If you find yourself doing this constantly (disappointed with your team's performance, that is), it's good to do a reality check. Do you have the right team? Do you have the right processes? Right leaders? Right stacks/architecture? Remember that people have personal lives; they may be having a bad day at work; they may be in the middle of something important. Respect that.

I once worked with a leader who, upon entering the room, made everyone go silent; all the attention was on him. He started asking questions that had already been answered. He was such a distraction that it ended up costing us valuable minutes during troubleshooting. This sometimes happens to me as well when I walk into a war room and people start acting differently (still working on it). This usually means people are not comfortable with your presence, and this is bad. It means you need to spend more time with them and help them become more comfortable.

While on this topic, a side note: don't form a cult around leaders; form a cult around your customers.

5) Never give up.

Mitigating the impact is one thing. Not knowing the real root cause(s) is another thing. I often find so many outages where the root cause is listed as "unknown". Continue to push for the root cause if not found. Otherwise, well, bad karma will come back for you. Infrastructure level outages are notorious for this. They can be obscure, hard to reproduce and debug.

To give an example, there was once a series of partial outages caused by random TOR (top of rack) switches going offline at random times. After blindly rebooting the first few or moving hosts to a different switch to mitigate the impact, I asked my team to take one offline --but leave it powered on-- for debugging the next time this happened. I also asked the team to snapshot the hosts connected to that switch. During the next few outages, we started to notice a pattern. There was always a host (or two) that was in a kernel panic state when this happened. The theory was that the host was sending bogus packets that were filling up the TOR switch's buffers and eventually causing it to run out of memory and just freeze (and no longer switch packets). Not all switches were demonstrating this behavior though. Upon doing a diff of all the configs, it was found that only the ones with "flow control" turned on were exhibiting this behavior. When we were experimenting with that setting a few months earlier, we had forgotten to turn it off on a few. A firmware upgrade later turned it on by default. I did not stop there. I challenged the team to prove this in a lab setting. And they did. We later added automated tests around this to prevent it from ever happening again.

Another example. We were once experiencing a series of DDoS attacks that varied in their patterns, complexity, and volume. One that looked like an L7 Slowloris attack was actually the result of an innocuous-looking code change made by an intern that appended the IP to a key in Redis instead of creating a new one. At one point, the traffic got so high that the link was saturated, causing requests to slow down. As the requests started to slow down, the web server connections started to fill up, which caused the reverse proxies to max out; ultimately, everything slowed down. Essentially, most internal systems were doing less work (CPU was way down) as there was not enough processing going on. I knew something was not right; I challenged my team to keep looking. We continued to analyze hundreds of packet captures strategically placed all around the network, and there was finally one that revealed unusual activity to one of the cache (Redis) hosts. Ultimately, that was traced back to a commit from several months earlier. This discovery was critical as it helped us regain our focus on dealing with the real DDoS attacks (these were such a distraction and sidetracked us quite a bit).

6) Can you think on your feet?

Ultimately, as a leader, you must make a call; make it quickly. This is especially true during outages and other emergencies. It is important to take a stand, even though it may be an unpopular choice. As a leader, if 10 people in a room are saying no, you must have the ability to say yes (assuming it makes sense to you and you strongly believe in it).

For example, back at IMVU, we used a parallel, distributed file system called "MogileFS" to store all our UGC. The master database (MySQL) was where all the metadata was stored (location, file count, etc). Once, someone on my team accidentally dropped a critical table on the master. Unfortunately, during this time, there were backups running against the slave and it was behind by several hours. When I got called about this, the very first thing I did was to ask the on call to make sure the replication (SQL thread) didn't start on the slave automatically (once the backups resumed). Then, we huddled, set up a war room, and started to work on manually skipping that statement on the slave. I then had to make an executive decision to pause all new uploads until the slave was fully caught up, to avoid the risk of data inconsistency issues and potential data loss. This was done after quickly consulting with the product, upper management, community managers, and other stakeholders across the company, and not reaching consensus on the best path forward. I strongly felt this was the right thing to do. Once the decision was made, we put in a lot of effort in managing our community of millions of users (some of whom made money off of us by creating content that they sold on our site). After all, we were telling them that they could not upload new content for more than ten hours and could not view content they had recently uploaded for several hours. We also gave some credit due to lost revenue from the outage.

7) Can you do a proper hand-off/delegate?

These are hard to do for most people. Sending an email to someone at 3 am (while they are in bed) is not a hand-off; leaving a VM is not a hand-off. You need an explicit ACK. Just like TCP, we need the 3-way (or 2-way) handshake to be complete for a hand-off to be complete and valid. You need an ACK for your SYN.
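The rule "no ACK, no hand-off" is simple enough to write down as code. The following Python sketch is only a model of the idea: the class, the method names, and the people's names are made up, and a real incident tool would also record timestamps and notify the room.

```python
class Handoff:
    """Tracks who leads an incident; leadership moves only on an explicit ACK."""

    def __init__(self, leader: str):
        self.leader = leader
        self.pending = None  # person who was asked but has not acknowledged

    def offer(self, to: str) -> None:
        """The SYN: ask someone to take over. Leadership does not move yet."""
        self.pending = to

    def ack(self, by: str) -> bool:
        """The ACK: only the person who was asked can accept."""
        if by != self.pending:
            return False
        self.leader, self.pending = by, None
        return True


if __name__ == "__main__":
    incident = Handoff(leader="alice")
    incident.offer("bob")       # e.g. an email or a voicemail at 3 am
    print(incident.leader)      # still alice: nothing was acknowledged
    incident.ack("bob")         # bob says "I have it"
    print(incident.leader)      # now bob
```

The useful property is the middle state: until the ACK arrives, the original leader is still the leader, so there is never a moment with no one in charge.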

I wasn't always good at this. To give you an example, several years earlier, circa 2009, I was leading an outage that was the result of an EPO (emergency power off; a security guard accidentally pressed that big red button that fully shut off power to all our servers. Thousands of servers). When you get a call from someone on your team that says, "we may have lost all our power in the data center", that is scary. I drove and led that outage. It was a marathon where I, along with several other engineers, worked mostly non-stop for almost 36 hours. I say mostly because I did end up taking a power nap of an hour so that I could keep going. But what I failed to do was explicitly hand off or delegate leadership of the outage to someone else. During that time, people were a bit confused and the efforts were not properly coordinated. What looked like a well-orchestrated effort until then started to look like a s*** show. Upon his return, my boss (who was out on business) told me, "You did 99% of the things well, except that one hour when there was no leader." It was a hard pill to swallow (I was like, "I did so much and this is what I get as feedback?"). But I am glad to have gotten that feedback. These are some lessons you just have to learn to grow. Period.

8) Can you run a marathon?

Some outages take hours; some take days; some take weeks (yes, weeks). Be prepared; firefighting can be long and taxing. Having the type A mindset helps. If you are unable to participate, just say No. Dragging your feet into something doesn't really help anyone. If you are tired, say so, take a break. You will not be perceived as someone who is not a team player. Keep your family or loved ones in the loop, so they know what to expect from you during this period. Fortunately, I have always had a very supportive wife, who knew the kind of role and industry I am in.

To give you an example, iOS 8, upon its release, had a bug (which Apple wouldn't admit) that caused the background refresh rate to increase by like 10X. Going by the iOS 8 adoption rate, we had less than 3 days to add 10X the capacity to be able to serve 1.2 Million RPS (10X more than regular peak). There was no other practical way to avoid a massive outage. We knew we had no choice but to embrace the situation and try hard so our customers did not suffer as a result. That meant managing our time effectively but also managing our families as well. Trust me, the first time (in a marathon like this), it would be hard to deal with, but you will get used to it eventually. Our goal was simple: We can afford to suffer for a few days so our customers don't have to. 30+ engineers across the company put in 15+ hour days over a 3-day weekend. We all also became really good friends along the way.
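The back-of-the-envelope math behind a scramble like this is worth having ready. The sketch below is in Python; only the 1.2 Million RPS target and the 10X factor come from the story above, while the per-host throughput and the headroom are invented numbers purely to show the arithmetic.

```python
import math


def hosts_needed(target_rps: float, rps_per_host: float,
                 headroom: float = 0.25) -> int:
    """Hosts required to serve target_rps while keeping `headroom`
    (a fraction of each host's capacity) in reserve."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable = rps_per_host * (1 - headroom)
    return math.ceil(target_rps / usable)


if __name__ == "__main__":
    RPS_PER_HOST = 2_000        # hypothetical per-host throughput
    target = 1_200_000          # from the story: 10X the regular peak
    regular_peak = target / 10  # so the regular peak was about 120K RPS
    before = hosts_needed(regular_peak, RPS_PER_HOST)
    after = hosts_needed(target, RPS_PER_HOST)
    print(before, after, after - before)
```

With those made-up inputs (2,000 RPS per host, 25% headroom), the regular peak needs 80 hosts and the 10X target needs 800, i.e. 720 more. Your real numbers will differ, but knowing the shape of this calculation up front is what lets you start provisioning in the first hour instead of the first day.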

9) Can you learn? take feedback?

There is always something to learn from every incident. Always. There is no such thing as like a perfect outage. Having a growth mindset helps. People with this mindset worry less about looking smart and put more energy into learning (that's what helps them be humble; that's what helps tame their ego). If you are interested in how to hack your brain to accept critical feedback, I wrote an article on this. Feel free to check it out: Hack Your Brain To Embrace Critical Feedback.

Bring this topic up in the postmortem. Even when people say things like, "this was perfect, there was no way we could have detected this sooner, resolved this faster; no way we could have caught this in canary", challenge those assumptions. If your TTD was 15 minutes, ask, "can it be 5 mins? or 1 min?" Always raise the bar. It's worth it.

And yes, having this mindset not only helps during the outage but also after.

Yay! Outage Mitigated! Well, your job's not done yet!

Clean-up

Sometimes, we hack together a bunch of things to mitigate the impact. Which is fine. But, as a result, things can get messy after an outage has been mitigated. You have worked really hard until now; do not ignore this important step of cleaning up and getting your cluster back to a clean, consistent, convergent state. This is the time to focus on steps that will prevent the immediate recurrence of the same problem. Clean up involves reverting manual changes, failing colos back in, rollbacks, roll forwards, turning features back on, etc. Make sure to bring the whole cluster to a clean, consistent, good state. It is always a good idea to maintain a log of the changes made in response to the outage.

Also, some engineers are notorious for saying things like, "this won't happen again as the engineer who did this has been given the feedback". I am like, "we must be prepared for a future where someone will always be stupid or make operator errors".

The real question here is how much you can trust someone when they say they have completed steps to prevent an outage from happening again for the same underlying root cause -- especially when a large number of teams are involved. My strategy here is: the first time, I give teams the benefit of the doubt. On the second strike, I start saying things like, "why should I trust you? you said the same thing last time". Again, balance (between trusting someone too much and not trusting them at all) is important here.

Also, communicate to stakeholders that the issue has been mitigated. A good post-outage clean up prevents the second one from happening in the immediate future. A clean up may also involve giving some extra credits to your customer (as explained in an example above). Take time to do this right. Offer your customers things that they deserve regardless of whether you have SLAs or not.

Book-keeping

So easy to neglect this part. If you are serious about customer experience, this step is mandatory. Make sure there is a way to record things like start time, end time, impact (revenue, users, engagement, brand reputation), priority, impacted property, the root cause (or several of them), responsible property, etc (all the usual ITIL stuff). This is critical for reporting, prioritizing reliability (if it makes sense) and surfacing issues with it. This data is critical to be able to add objectivity if you trying to make an argument to prioritize reliability improvements.

Not collecting this data is analogous to not getting feedback on your product, or not listening to your customers. To give you an example, we once shipped a new backend for the message queue system powering our chat app. The system was having a lot of issues early on. But, we painstakingly (yes, this is boring work, but it needs to be done) maintained something called a "service quality" database where details of every outage were recorded and reported. After a few weeks of outages, when we analyzed the data and showed the company that sixty percent of the outages in the past month were caused by changes made to that system, people noticed. As a result, the next few sprints were spent on improving the reliability of that system, and the number of outages dropped dramatically. Not just reliability, the velocity also increased as we had better test coverage, better CD certification plans, PR builds, etc, that actually prevented defects from reaching customers. It's a classic case of slowing down when the shit hits the fan (you go over your budgets), investing in reliability improvements, and resuming full steam once you are under your budgets again.
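The kind of analysis described above is not complicated once the data exists. Here is a small sketch, assuming each recorded outage carries the name of the responsible system; the function name and the system names in the usage example are made up.

```python
from collections import Counter


def outage_share_by_system(responsible_systems):
    """Return each system's share of outages, largest contributor first.

    responsible_systems: iterable with one system name per recorded outage.
    """
    counts = Counter(responsible_systems)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {system: count / total for system, count in counts.most_common()}


# Usage with made-up data: ten outages, six attributed to one system.
last_month = ["message-queue"] * 6 + ["auth"] * 3 + ["cdn"]
shares = outage_share_by_system(last_month)
top_system = next(iter(shares))
```

With this sample input, `top_system` is the message queue, which is exactly the sort of number that gets people's attention in a review.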

Postmortem

Feel like I should write a book on this topic. I have been known to bring about a culture of blameless postmortems and continuous learning at every company I worked for (perhaps from my IMVU / Lean Startup background). After an outage, it is equally important to conduct an immediate postmortem (PM). Ideally within a few days, before the context is lost. Your outage is not complete without this.

Once the PM is complete, make sure to close the loop on remediation items. I religiously track these every week and love sending weekly reports summarizing the status of each to all the stakeholders. I have always dreamed of ways to systematically track repeat incidents/outages, i.e. outages with the same underlying (root) cause(s). I have gotten close, but this is somewhat tricky to get perfectly right as there will be false positives and false negatives.
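One simple way to approximate repeat-incident tracking is to group incidents by a normalized root-cause label. This is only a sketch under that assumption; the function names and incident IDs are hypothetical. It also shows where the false positives and negatives come from: two different problems sharing a vague label will be grouped together, and the same problem described in different words will be missed.

```python
from collections import defaultdict


def normalize(cause):
    """Lowercase and collapse whitespace so trivially different labels match."""
    return " ".join(cause.lower().split())


def find_repeat_causes(incidents):
    """Map each root cause seen in more than one incident to those incident IDs.

    incidents: iterable of (incident_id, list_of_root_cause_labels).
    """
    by_cause = defaultdict(list)
    for incident_id, causes in incidents:
        # Use a set so one incident is counted once per cause.
        for cause in {normalize(c) for c in causes}:
            by_cause[cause].append(incident_id)
    return {cause: ids for cause, ids in by_cause.items() if len(ids) > 1}
```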

Also, leverage postmortems to bring about transformational change at scale. Never underestimate their importance. I have done this with some good success. Regarding attendance, people are sometimes notorious for not attending. Sometimes due to lack of context. Give them that. They will start to attend. Make sure everyone who needs to be involved is present.

Some of the best PMs I attended were the ones where there was a lot of passion; lots of disagreement; lots of back and forth on what needs to be done. This usually means, they need more than one follow up. If you conduct a few on the same outage and feel like things are not going anywhere, it means a deeper analysis is needed. Take a break. Rethink your strategy. Sometimes, it is OK to drop a few outage remediations or not agree on everything that was talked about. Remember, the response should be proportional to the impact. For example, if you've lost a million dollars in revenue, it may make sense to deploy an engineer or two F/T for a few months/quarters to make the system more reliable.

I judge the quality of a postmortem by how well the participants were engaged (or disengaged, checking their phones). The deep engagement usually comes from ownership and customer obsession; it can't be faked.

Some Wisdom

1) An Outage is not about you; it's about your customers.

Don't make this personal. This is not about you. Put your ego to the side. Focus on restoring service for the users; focus on making them feel valued even during an outage. You can worry about egos and hurt feelings later.

2) Too much passion or perfection (during an outage) is bad

Calm down. Your house is likely not on fire. Yes, your site is down; but, no one is actually dying. It is one of those moments when you need to think clearly and trust others. If you have someone who is saying, "don't make this change, you will make things worse, the site will be down for 5 seconds", it usually means that person has his/her passions wrongly channeled. Or, if you have someone who is absolutely against trying anything that is not very scientific, analytical, or doesn't make a whole lot of sense, it is time to coach them that it is OK to hack things a bit during an outage. The world is not perfect; the software we have written is not perfect. It is OK to jiggle handles sometimes. Don't worry, there will be enough time later to dig deep and understand the real root cause(s).

3) Jiggling handles (during an outage) is not a bad strategy.

Focus on mitigating the impact first. It is OK if you are doing something that doesn't make a lot of sense. When I was at IMVU, we had a cluster restart script that restarted most of the stateless clusters in a controlled manner while slowly enabling features. It was an effective tool to quickly rule out theories that did not work. If it worked, all good. Your customers are happy. Go back and spend as much time as you want on looking at artifacts that can lead you to the root cause (make your tool snapshot them if needed).

4) (During an outage) never ask why or who f**** up.

During an outage, don't ever ask unproductive questions like, "Why didn't we test backups? Why didn't we test BCP? Why don't we have proper monitoring? Who made the change? Why did they push in the middle of a live event?", etc. Asking these shows a lack of empathy and maturity, and usually leads to a blame game.

5) (Outage) Fatigue and the "needle in a haystack" problem

People's attention span, focus, and productivity can go down due to things like fatigue and exhaustion. This is especially true when the outage is more like a marathon (started at 3 am and it's 10 am now and people are tired). This means that the engineers are not operating at their peak productivity and, as a result, there is a chance of missing critical events/signals that are somewhat non-obvious and buried deep within a pile of logs, traces, alerts, graphs. This is especially true at scale, when you are dealing with (tens of) thousands of machines and a handful of them may still be in a bad state but still serving traffic. So, the impact may be low in terms of number of users impacted, but there is still impact.

This is bad for the small percentage of users who could still be impacted. This is when proper planning really helps. If someone is fatigued, I would rather have them take a break and hand over their responsibility to someone else. It also really helps to ask questions like, "Are there any nodes/pods/containers still in a bad state? You sure? Monitoring all green?". What also helps is to democratize critical product health metrics and dashboards, so everyone in the company and all your customers have access to this data in real time, can make informed decisions, and can ask questions / raise concerns when things seem off in the graphs.

Remember, even if a single customer (out of a billion) is impacted to a point where the experience is consistently below expectations or your SLA, you, as the on call, must be looking into that. You cannot do this if you are suffering from outage hangover or fatigue.
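The "any nodes still in a bad state?" question is easier to answer when a tired human does not have to eyeball thousands of hosts. A minimal sketch, assuming you can already get a per-host health snapshot from your monitoring system; the data shape, field names, and function name are hypothetical.

```python
def find_stragglers(hosts):
    """Return hosts that are unhealthy but still serving traffic.

    hosts: mapping of host name -> {"healthy": bool, "serving_traffic": bool}
    (a hypothetical snapshot exported from your monitoring system).
    """
    return sorted(
        name
        for name, state in hosts.items()
        if not state["healthy"] and state["serving_traffic"]
    )
```

An empty result is what "monitoring all green" should mean before the outage is declared over.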

6) It's not about perfection; it's about progress.

I have never been fully satisfied with the way an outage is run. I always feel like there is room for improvement. I love the culture of continual improvement. This is where a quality postmortem helps.

7) Never hide anything (during an outage or otherwise)

If you have f****d up, admit it. I love working with people with high integrity. Such a joy. They understand that emotions are high. Stakes are high. They cooperate and are forthright about things. After all, this is teamwork, and if someone is not pulling their weight or is hiding something, that will adversely impact TTR. Embrace failure.

Summary (Tl;dr)

Software engineering is about more than just writing code or operating your product. The best engineers are focused on the customer (internal and external); they are focused on making the lives of others better through the products they build and the services they offer. They have inner peace. They are mindful, self-aware and don't indulge in mindless work. They are inherently mission-driven and find meaning in everything they do (even mundane work). They are all about the problem they are trying to solve. They aren't afraid to admit their failures; they are always learning from their mistakes. They take steps --without being asked-- to prevent a defect from ever reaching production again.

And, yes, the best software engineers aren't afraid to take ownership of their products and run them; they aren't afraid to be on call for the code they wrote; they don't shy away from learning new skills and building new tools to better understand how customers are experiencing the products they build. They constantly strive for user feedback and use it iteratively to improve their products.

Are you one of them? Hopefully, this article can get you one step closer to being "that" engineer. Good luck!

Appendix A: Users don't care about Five Nines; neither should you

I tweeted about this some time back. While on the topic of outages, I can't help but talk about how people just mindlessly think they need extreme availability/uptime; like five nines uptime, without taking into account what it would actually take to get there.

There is a reason for this. Most of the devices we use to access a service or product over the internet don't have five nines availability. I mean, how often do you have issues with your ISP at home? Your router? Your laptop's wifi? Your cell carrier? Your iPhone? Given this fact, there is no point in trying to make your product more available than any of those devices.

As customers and users, we are always accustomed to a certain amount of downtime with almost everything we use. We just live with it. Of course, too much downtime is not good. To know what the right level is, you need to truly understand what your tolerance for risk is; what it is that your customers really want; how they react to downtime; etc. Based on that data, you need to decide how much new feature work you are willing to trade off against reliability. A good way to do this is to establish budgets: error budgets; uptime budgets. The formula is simple: Go over? Slow down. Still under? Ship... ship... ship.
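The "go over? slow down" rule can be sketched in a few lines. This assumes a request-based budget, where the SLO target is the fraction of requests that must succeed; the function names and the numbers in the test data are illustrative assumptions, not figures from any real system.

```python
def error_budget_remaining(slo_target, total_requests, failed_requests):
    """Fraction of the error budget still unspent (negative means overspent)."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests <= 0:
        return 1.0  # no traffic yet, so nothing has been spent
    allowed_failures = (1 - slo_target) * total_requests
    return 1 - failed_requests / allowed_failures


def release_decision(slo_target, total_requests, failed_requests):
    """Apply the simple rule: under budget means ship, over means slow down."""
    remaining = error_budget_remaining(slo_target, total_requests, failed_requests)
    return "ship" if remaining > 0 else "slow down"
```

For example, with a 99.9% target and a million requests, roughly a thousand failures are allowed; 250 failures leaves about three quarters of the budget, while 1,500 failures means it is time to slow down.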

The reality these days is that you can get to a few nines of availability without doing much. Maybe that is all you need. With the democratization of distributed systems and the rise in adoption of container orchestration systems like Kubernetes (K8s), which --among many other things-- give you auto-remediation, auto-scaling, etc, natively, you get so many "nines" right out of the box; every "nine" after that is not cheap (certainly not free; it can get very expensive). You really need to understand whether reliability and performance are your strategic differentiators and make the right choice.
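To make the cost of each extra "nine" concrete, it helps to translate availability into allowed downtime. A quick sketch of the arithmetic, assuming a 365-day year; the function name is made up for illustration.

```python
def allowed_downtime_minutes_per_year(nines):
    """Downtime allowed per 365-day year for an availability of N nines.

    Two nines means 99%, three nines 99.9%, and so on.
    """
    unavailability = 10 ** -nines
    return unavailability * 365 * 24 * 60


# Print the allowance for two through five nines.
for n in range(2, 6):
    print(f"{n} nines: {allowed_downtime_minutes_per_year(n):.2f} minutes/year")
```

Each additional nine cuts the allowance by a factor of ten: two nines leaves about 3.65 days a year, while five nines leaves a little over five minutes.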

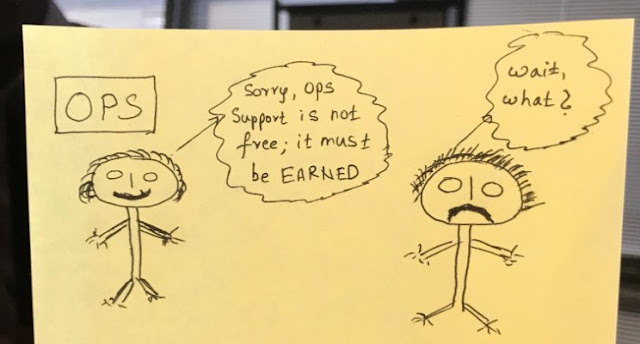

If you are an Ops Engineer and ask product what they care about and get the answer, "make sure product is up all the time", it is your responsibility to ask why. Compartmentalizing Ops as the sole owners of uptime is so wrong. Uptime is everyone's responsibility; not just Ops'. Well, so is incident/outage management; so are postmortems. In fact, see "Appendix B" to see where I think Ops and Dev are headed and the shift that is happening across the industry.

Appendix B: Everyone is Ops; everyone is Dev

Ops is moving up the stack

I'd like to close by trying to surface my perspective on this topic (this topic perhaps warrants an article in itself). I actually feel compelled to write one as I've been an Ops guy for more than a decade and have seen so much change in the way software is operated at scale, or the way operations engineering, as a discipline, is evolving, that it is important to tell you all about it, so you can make better, more informed decisions.

Basically, with the rise of Cloud Computing, (Docker) Containers, Immutable Deployments, Kubernetes, Serverless, Istio, Envoy (for service mesh), Microservices, and the general democratization of distributed systems, the infrastructure space is undergoing a major transformation and a (paradigm) shift. Business logic --by itself-- is no longer the differentiator when it comes to creating value and innovating.

With all the abstractions (most of which leak), the operational complexity in running these distributed applications is also increasing proportionally (especially at scale). This is where, typically, the Ops experts come in and add value. They make the economics of scale work. They are experts in Telemetry, Instrumentation, Monitoring, Tracing, Debuggability -- or, Observability, as they call them these days. It appears that Ops is moving up the stack. Ops has come a long way: from racking/stacking servers (back in the late nineties) to tweaking the kernel to running packet traces to config management. These days, Ops folks are focusing on building infrastructure and Container Orchestration; they are focused on building observability stacks. Some are also focused on how apps are built, tested, deployed, provisioned (Test Infrastructure and frameworks). Some are more focused on middleware and the edge/ingress. The core software teams building the mobile apps and the business logic actually need the whole ecosystem to be successful and to be able to rapidly innovate at scale.

Given the paradigm shift, it is even more critical that software developers also learn how to operate their complex distributed systems at scale; otherwise, they will become irrelevant sooner rather than later. If you are a dev team and are calling a different team each time you are analyzing a distributed trace, running a packet capture, or debugging a complex production defect, that strategy will not only slow you down, it will also dramatically increase your TTR, and thereby frustrate customers and ultimately hurt your business.

Dev is moving down the stack

In fact, at Yahoo, I was the primary driver behind launching Yahoo's Daily Fantasy back in 2015 in this model (you wrote it; you own/run it). This was a radical departure from how software was traditionally operated at scale. The model was simple: the dev team was responsible for almost all aspects of the development lifecycle: from design to writing code to writing tests to going through multiple architecture senior tech council discussions to paranoids' security reviews. They were also responsible for testing and deploying the code they wrote (using CD). And, finally, for operating it and scaling it: things like monitoring, on call, capacity, postmortems, etc. They were their own Ops.

The Ops teams, on the other hand, helped with the product launch in areas like documentation, understanding of things like DNS, CDN, infrastructure provisioning, monitoring, load balancing. Also, in the understanding of how all the components are interconnected in a complex distributed system. Writing playbooks, how software is deployed in a continuous fashion multiple times a day, how to roll back, etc. But the ops team was mostly hands-off after that. They still provide consulting and expert services periodically and are the go-to POC for things like tracing, monitoring, on call, capacity, infrastructure, etc.

The beauty of such a model is that it encourages ownership, holds people accountable and has led to great positive outcomes. Engineers on that team really care about customers. Just to give an example, one underage customer once tried to register to play daily fantasy; immediately, an alert triggered (an HTTP 4XX) that went directly to the dev on call, who immediately responded and verified that the system did what it was supposed to do (i.e. did not allow the person to register), then created a ticket to follow up to make the alert more actionable (no need to wake someone up at 3 am if the alert is not actionable) and went back to bed. Awesome, right? I have given talks on this at several conferences.

Appendix C: "DevOps" is no longer a strategic differentiator.

I don't think "DevOps" is a strategic differentiator anymore. "DevOps" is table stakes these days; it has been so for a while now. A lot of enterprises have been doing things like CI/CD (a big part of "DevOps") since 2007 or earlier (that is more than a decade ago). If you are one of those still behind, it's time to do some serious catching up, so you can focus on the bigger, better problems.

The strategic differentiator will be how obsessed you are with your customers (both internal and external). The obsession would mean, as an engineer, you would focus on things that matter the most to your customer. That mindset will lead you to develop skills you need the most to be able to win the hearts of your customers (some are described in this article). Ultimately, you will become a well-rounded engineer; one, who not only takes pride in writing clean code of high quality but also someone who is in love with his/her customers.

Anyway, if you have come this far, you have done great. Good job! It means you are serious about your customers.

Good luck!

_____________________________________________________________

If you found this article useful, leave a comment or like it - goes a long way in motivating me to produce more. Thank you!
