Want to Solve Over-Monitoring and Alert Fatigue? Create the Right Incentives!

Note: I have been giving talks around the world on this topic (SREcon, Velocity, etc.). I figured I could reach more people through an article. Any and all feedback is highly appreciated!

"Research has shown that 72%–99% of ECG monitor alarms are false or clinically insignificant."

Quite a staggering number, and quite scary given that lives are at stake. If you have ever been to an ICU, you cannot help but notice the telemetry monitors and their constant beeping; they are everywhere. I had first-hand experience with these monitors in the NICU, where my twin boys were cared for after being born prematurely. I still vividly remember how my wife and I used to freak out every time one of them went off. Being an ops guy, I could not help but relate this to being paged while on call. Out of curiosity, I did a bit of research and found that some Ph.D. students had published a paper on this very topic in 2013. It describes how nurses who work among constantly beeping monitors may start to ignore the alarms.

Unlike a missed page saying that your website or service is down, failing to act on an alarm at a hospital can have far more serious consequences. In 2010, at a hospital in Massachusetts, a patient's death was directly linked to telemetry monitoring after alarms signalling a critical event went unnoticed by 10 nurses. The Joint Commission made improving the safety of clinical alarm systems a National Patient Safety Goal for 2014. Also,

"ECRI Institute has placed alarm hazards in first or second place on their Top 10 Health Technology Hazards annual list since 2007."

I attempted to solve this problem when I joined Zynga (back in 2013) as the head of SRE (Site Reliability Engineering). This is my story. Just to refresh your memory, Zynga famously pioneered the concept of a hybrid cloud: new games (like FarmVille) were launched in AWS and brought back in-house to a private cloud (called "Zcloud") once their traffic patterns became predictable. And that was back in the 2010/2011 timeframe, making Zynga a true pioneer in this space. Pure genius! Additionally, circa 2012, a central platform team was formed to centralize common services and move further towards an SOA/microservices architecture. Previously, every product had its own stack and its own cluster: lots of duplication, lots of monoliths.

In the new architecture, most studios did not really need to provision or manage any infrastructure to build (mobile) games (we used to joke, "there are no servers associated with your mobile game; what are we going to monitor?"). Most of the functionality needed to build (mobile) games was broken down into services (perhaps "microservices", as they are called these days). There were some incredibly smart people at Zynga who envisioned such amazing application architectures (back in 2012, no less); I was lucky to have worked with them.

But when it came to false alerts and noise, things were a bit crazy. More than 100,000 alerts triggered every month, most of which went to dashboards monitored by a large (50+ person) SRE team distributed across the US, Bangalore and the Philippines. The team was primarily responsible for monitoring and incident response; they escalated to the dev teams when they were unable to resolve something on their own. Due to the sheer volume, alerts were color-coded by priority so the teams could focus on the "highest priority" alerts while ignoring the "less important" ones. For example, a Membase-related alert (a stateful service) was critical, needed immediate attention and was color-coded red; alerts related to stateless services (e.g., a crashed Java process) were considered less critical and were color-coded some lighter shade of red.

Pretty soon the team (almost) ran out of colors. Later, when it was time to onboard new alerts related to SOX compliance (as the company had gone public), the team had no choice but to make the dashboard flash every time there was a SOX alert, so they knew it was not just a P1 but a P0. Additionally, some teams were given priority based on how much revenue or noise they generated. In a way, SRE was "best-effort".

As you can imagine, this is not a great way to run operations at large scale, and we are talking internet scale: 40,000+ servers serving 25+ dev teams, 150+ million MAUs, etc. As a result, service quality suffered. Outages occurred almost every day. SRE response times were on the order of hours. SRE credibility was almost lost. In response, dev teams started to staff their own Ops teams or have devs do ops work (in addition to feature development). But they obviously struggled with that strategy as well, since they were neither very familiar with software operability at scale nor given the training and tools required to operate their products on their own.

It was very clear to me that throwing people at the problem was not going to solve it (the previous leader had tried that without much success). The graph below explains why.

Once I started digging deeper into the root cause of the degradation in service quality, it became increasingly clear that the SRE teams were spread too thin, trying to do far more than they could reliably handle. Their main function was reliability, and monitoring is a key component of it. But instead of focusing on that, they were trying to do way too much. Depending on the team, some were focused on deep product engagement, some on automation, some on being tier 1 for alerts, etc. The pyramid below offers a nice way to look at reliability from a complexity perspective, from the lowest level to the highest. People almost seemed to have forgotten that monitoring was a key part of the job!

I knew this wasn't right, and that a more radical approach was necessary to get the SRE team, and reliability, back on track. The right thing to do was to drastically reduce the false alarms, not to deploy more people to monitor what was essentially noise. I wasn't really sure where to start, though. I routinely went to people's desks and asked them why some alert was noisy and what they were doing to reduce false positives. The responses I got were not very encouraging; people would say something like,

"Can you first ask your team to respond to alerts in a timely manner? We can talk strategy later"

In other words, they were telling me to go back and do my job first. As you can imagine, things were not going anywhere. I still remember being anxious about those dreaded escalation calls because my team had not responded to some critical alert buried in a sea of other alerts and noise.

I did not give up. I went around and continued to talk to people, and continued to invest in those relationships. After a while, as people started opening up, I realized that everyone wanted the alert volume to be low; it's just that no one knew how to get there. SRE, being a horizontal org, was in a unique position to lead the way. Then, one day, there was a massive outage that impacted all the teams (the backend could not gracefully handle a broadcast storm in the network, which caused it to crash). Then there were more. During one of those outages, frustrated and tired, I decided that this was it: it was time to make some dramatic changes, because the incremental ones were just not working. That's when I called my boss and told him,

"This is it; I am going to be making some pretty dramatic changes, and we should expect some major shake-up because of this."

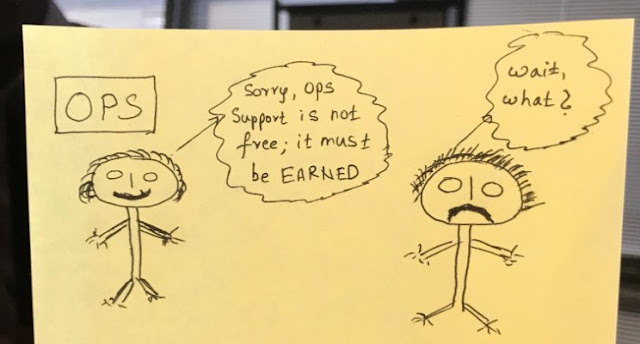

I created a new vision and mission for SRE, with a roadmap, milestones, etc. I communicated it to the whole company and met with key leaders across the company. I got feedback, iterated on it, and then finalized it. I called the initiative "Clean Room". Think of it as a virtual room that all the dev teams can enter to use the services provided in it, i.e., mainly monitoring. The catch is that you are only allowed to enter if you are below certain alert budgets for a given time period (usually a week). Also, once you are in, you must remain "clean", meaning you stick to your alert budget for the week. If at any point you go over your alert budget, you are kicked out of the "Clean Room", i.e., denied SRE support; you are free to staff up your own SRE team or have your devs perform that function. What I was really trying to tell the dev teams was that SRE support is not free; it must be earned. And here's how you can earn it.

The corollary is that if you are a dev team living within your means (within your alert budget, that is), you get VIP treatment, i.e., high-quality SRE service with ultra-low response times. As you can see, the idea behind the initiative was to create the right incentives so people do the right thing: incentives that drive the right behavior across the company.
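The admission and eviction rules described above can be sketched in a few lines of Python, assuming a fixed weekly window and a per-team alert budget. The class name `CleanRoom`, its methods and the team names are hypothetical illustrations, not the framework Zynga actually built.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class CleanRoom:
    """Hypothetical model of the "Clean Room": SRE support only for teams within budget."""

    # Weekly alert budget per team (team name -> max alerts per week); values are illustrative.
    budgets: Dict[str, int]
    # Alerts counted so far in the current weekly window.
    weekly_counts: Counter = field(default_factory=Counter)
    # Teams currently inside the Clean Room, i.e. receiving SRE support.
    members: Set[str] = field(default_factory=set)

    def record_alert(self, team: str) -> None:
        """Count an alert and evict the team as soon as it exceeds its budget."""
        self.weekly_counts[team] += 1
        if self.weekly_counts[team] > self.budgets.get(team, 0):
            self.members.discard(team)

    def close_week(self) -> None:
        """At the window boundary, admit teams that stayed within budget, then reset counts."""
        for team, budget in self.budgets.items():
            if self.weekly_counts[team] <= budget:
                self.members.add(team)
            else:
                self.members.discard(team)
        self.weekly_counts.clear()

    def has_sre_support(self, team: str) -> bool:
        return team in self.members


if __name__ == "__main__":
    room = CleanRoom(budgets={"studio-a": 2, "studio-b": 5})  # hypothetical teams
    room.close_week()  # both start at zero alerts, so both are admitted
    for _ in range(3):
        room.record_alert("studio-a")
    print(room.has_sre_support("studio-a"), room.has_sre_support("studio-b"))  # False True
```

Evicting a team the moment it exceeds its budget, but only re-admitting it at a window boundary, mirrors the "kicked out until you figure it out" rule: a team that blows its budget has to stay clean for a whole week before it earns SRE support back.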

The first time I shared this proposal with a large group of people, the reaction was pretty intense. People (dev teams, mainly) freaked out. They were like,

"There is no way you can do this and deny us service; we pay your salaries"

"You can't take it away; what are we going to do? Where are we going to find all these new SREs?"

"All my Devs are going to quit"

Word spread quickly, and, as you can imagine, there were several escalations to my boss and my boss' boss. It wasn't until after several meetings, and with great support from some key leaders at the company, that most of the teams agreed that something as radical as this (relatively speaking) was the right thing to do long term. They agreed that it was worth trying and potentially a great way to dig ourselves out of the huge hole we had unintentionally created.

My team's response, on the other hand, was, "What about our job security?" After all, I was proposing that we (temporarily) give up some responsibilities, and no one likes that. They were unhappy that I had asked them to focus only on monitoring; most people were mad, and some threatened to quit. I convinced most to stay, but I still lost some. I also closed down our NOC in the Philippines, as it was doing redundant work (the team was given a different role). I urged my team to take baby steps: focus on one thing (monitoring), execute on that one thing, and prove that we could deliver. We could then take on more responsibilities like reliability engineering, automation, etc. I also empowered them to say "no"; I told them,

"Say 'No' to things that don't matter, so you can say 'Yes' to things that matter"

I broke the initiative down into three phases. Phase 1 was rolling out the Clean Room: negotiating contracts with each of the dev teams and building the framework to instrument the alert flow, owners, etc. We also laid down some basic ground rules: every alert is actionable, every alert needs immediate action, etc. We then gave the teams a few months to do the initial cleanup. The SRE team helped with the initial reduction and was fully focused on it. Most teams also ran daily incident/alert reviews to root-cause every alert, get to the bottom of it, and make sure it was actionable and stayed actionable.

The framework that housed the "Clean Room" was built with transparency in mind, so anybody in the company could track where an alert was in its lifecycle, who owned it, who was working on it, etc. We also held a weekly meeting with all the key stakeholders to give and get feedback, and sent out a weekly metrics report. Transparency is important because when you expect high standards from someone, you must be prepared to hold yourself to the same high standards.
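The transparency requirement can be sketched as an alert record that carries an owner, an assignee and an append-only history, plus a roll-up for the weekly report. The lifecycle states (`TRIGGERED`, `ACKNOWLEDGED`, `ROOT_CAUSED`, `RESOLVED`) and all names here are assumptions for illustration; the post does not describe the real framework's data model.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List, Optional, Set, Tuple


class State(Enum):
    TRIGGERED = "triggered"
    ACKNOWLEDGED = "acknowledged"
    ROOT_CAUSED = "root_caused"
    RESOLVED = "resolved"


# Hypothetical allowed transitions between lifecycle states.
TRANSITIONS: Dict[State, Set[State]] = {
    State.TRIGGERED: {State.ACKNOWLEDGED},
    State.ACKNOWLEDGED: {State.ROOT_CAUSED, State.RESOLVED},
    State.ROOT_CAUSED: {State.RESOLVED},
    State.RESOLVED: set(),
}


@dataclass
class Alert:
    name: str
    owner_team: str                 # team accountable for this alert
    assignee: Optional[str] = None  # person currently working on it
    state: State = State.TRIGGERED
    # Append-only audit trail: (timestamp, new state, who made the change).
    history: List[Tuple[datetime, State, str]] = field(default_factory=list)

    def advance(self, new_state: State, actor: str) -> None:
        """Move the alert to a new state, rejecting transitions the lifecycle does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state.value} to {new_state.value}")
        self.state = new_state
        self.assignee = actor
        self.history.append((datetime.now(timezone.utc), new_state, actor))


def open_alerts_by_owner(alerts: List[Alert]) -> Dict[str, int]:
    """Count unresolved alerts per owning team, e.g. for the weekly metrics report."""
    return dict(Counter(a.owner_team for a in alerts if a.state is not State.RESOLVED))
```

Keeping the history append-only is what makes the "anybody can track it" promise cheap to keep: the weekly report and the "big numbers next to your name" view are both just queries over the same records.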

We picked a launch date and communicated it (multiple times) to the whole company. Then we launched. Yeah! The launch went pretty smoothly for the most part, as teams were already mostly prepared. Most teams made it; some were still working on cleaning up their alerts. Overall, it was a good launch. And yes, we did kick some teams out until they figured their s*** out.

An important note about public shaming and peer pressure: (done in moderation) they are great ways to drive progress on large-scale initiatives, especially those that span multiple teams and functions and cross organizational boundaries. After all, no one likes to see big numbers next to their name. Once something that people are not too excited about (it's not as shiny as that new project everyone wants to work on) becomes a corporate initiative, leverage it to drive good behavior and bring about a culture change, in addition to meeting the quarterly goals (or OKRs). People may resist initially, but they will slowly start to get those dopamine bursts when they realize their products are now better monitored, more reliable, more secure, respectful of users' privacy, faster to ship, continuously delivered, horizontally scalable, broken down into microservices, and so on. These are all things needed to make sure you can keep iterating quickly and innovating at scale.

Overall, "Clean Room" was a success:

- SRE credibility was (mostly) back;

- More teams wanted to onboard;

In terms of metrics:

- Alert volume dropped by >90%;

- Average SRE response times dropped by 80% to under 5 minutes;

- Unassisted resolution rates increased by 600%;

- Perhaps most importantly, uptime increased by a whole nine, and our customers were much happier!

Personally, I was also given the additional responsibility of being Zynga's "uptime czar".

Key Insights & Lessons Learned

1. Have a strong vision; rally people around your vision

I always wanted to get to a point where:

- All alerts are actionable; all alerts need an immediate response. If something doesn't need immediate attention, it must not be an alert; create a ticket instead.

- All alerts need human intelligence to fix; if a defect can be fixed by executing a playbook, just automate its execution (i.e. auto-remediate error conditions in production). It doesn't make sense to rely on humans to type commands verbatim from a playbook to fix a prod issue.

- Get to no more than 2 alerts per shift (so we can keep up with root-causing each one)

- Page dev teams directly (waking someone up at 3 am is a great way to incentivize dev teams to write reliable software and keep the alert volume low. The corollary is that not having to wake up at 3 am is a great incentive for anyone to write good software, keep the signal-to-noise ratio high, keep things clean, etc.)

- Devs own their operations; ops move up the stack and focus on infrastructure software engineering, automation, and tool building.
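The routing rules in this list can be expressed as a small decision function. This is a hedged sketch: `Condition`, `route`, the `remediation` hook and `MAX_ALERTS_PER_SHIFT` are hypothetical names, and a real setup would live in an alerting or paging tool rather than in application code.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Target from the list above: no more than 2 alerts per shift.
MAX_ALERTS_PER_SHIFT = 2


@dataclass
class Condition:
    name: str
    owner_team: str
    needs_immediate_action: bool
    # Hypothetical automated playbook; returns True if it fixed the problem.
    remediation: Optional[Callable[[], bool]] = None


def route(cond: Condition) -> str:
    """Decide whether an error condition becomes a ticket, an auto-remediation or a page."""
    if not cond.needs_immediate_action:
        # Not urgent: it must not be an alert, so file a ticket instead.
        return f"ticket:{cond.owner_team}"
    if cond.remediation is not None and cond.remediation():
        # A playbook a machine can run should not wake up a human.
        return "auto-remediated"
    # Needs human intelligence: page the owning dev team directly.
    return f"page:{cond.owner_team}"


def over_shift_target(pages_this_shift: int) -> bool:
    """True when a shift has seen more pages than the team can realistically root-cause."""
    return pages_this_shift > MAX_ALERTS_PER_SHIFT
```

The ordering matters: the urgency check comes first, and a failed auto-remediation falls through to a page, so automation removes toil without silently swallowing real problems.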

2. Leverage outages; conduct Postmortems (PM).

Conduct a PM after every outage. Make it part of the culture; establish a blameless postmortem culture. Focus on the problem and the lessons learned rather than on individuals or teams. I like to run them using the "5 Whys" process. Don't stop at "this was operator (human) error"; drill deeper into what caused that operator error: Training? Not enough safety nets built into the system? Other systemic or cultural issues? I religiously conducted PMs after every major incident during my time at Zynga. I also sent weekly reports showing the status of each remediation item, so people knew that I was not just conducting them but also making sure we closed the loop. In some ways, that helped build my credibility; people were willing to take me and my vision seriously, and were willing to talk to me.

3. Find your allies

There are always going to be some; you just have to find them. During my first few months, I met with almost all the studios and the key people in each. To my surprise, I found many who were (radically) aligned with my vision. Some were skeptical. Some laughed. But I think that's fine. I persisted and never gave up. In hindsight, the relationships I built early on were critical to the success of "Clean Room". After all, companies are made up of people, and it is people who make decisions. Pro tip: if you are trying to push an initiative through with a team and are not getting much traction, start reaching out to individuals on that team. You will almost always find people who are aligned with you, even if the team's leader is not. It's an effective way to blaze the trail.

4. Get support from Upper Management

This is another important area. Do not be shy about meeting senior leaders. Most (if not all) will be delighted to meet you; you just have to ask. I met so many at Zynga (and at Yahoo). Don't go into the meeting unprepared: have your vision ready, and be prepared to explain the problem you are trying to solve concisely. Ask for feedback. Ask for support. You will be surprised how much of a difference this makes. Also, try to set up a follow-up meeting as soon as possible so you can keep them informed about your progress. By the time I was launching "Clean Room", most of upper management already knew who I was and what I was trying to do, and that helped a lot in shipping it despite the hurdles and barriers I faced.

5. Learn to say "No"

One of the hardest things to do as a professional (or otherwise) is to say "no". We tend to overcommit out of fear of disappointing others, for social cohesion, and for a variety of other reasons. It is easy to take on extra work, but hard to ruthlessly prioritize and respectfully say "no". Overcommitment almost always means falling short on delivering results. The right thing to do is to (respectfully) say "no" where it makes sense, and to explain why. Also, it's not enough for you, as the leader, to say "no"; you must empower all of your teams to say "no" as well.

6. Take a stance - Transformational change (at scale) needs a more radical approach

When it comes to making transformational change at scale, take a stance; have strong opinions. It is better to go all-in on something you truly believe in; half-assed approaches don't usually work. Remember, maintaining the status quo is easy; anyone can do that. Again, you can't do this alone. Your radical approach must be complemented by a strong vision, strong support from upper management, your and your teams' ability to say "no", your ability to find allies, and your ability to leverage outages and incidents productively.

Closing thoughts

It's all about aligning incentives

Transformational change at massive scale is hard; it really comes down to creating the right incentives. After all, we humans are all about incentives. Heck, even my twins refused to be potty trained until we created the right incentives (praise, more outdoor time, etc.). In response to change, the first question we generally tend to ask is, "How will this add value to my team? My company? My community?" Aligning incentives is core to changing a culture, and to finding enough people who will be guardians of that culture to make it stick.

For example, I am not sure how many of you know this, but at Google, SRE support is optional. You can't have it until your service reaches a certain scale or complexity, and there is usually a pretty strict onboarding process based on SLOs (service level objectives). The SRE team can hand the pager back to the dev team if at any point the dev team goes over its budget. This seems like a great way to incentivize teams to build scalable and reliable products, given that SRE resources are rather scarce and definitely not free (you must give up dev headcount to get SRE heads).
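An SLO-based budget works out to simple arithmetic. Here is a minimal sketch, assuming an availability SLO measured over a 30-day window; the window length and the function names are assumptions, and Google's actual hand-back policy involves more judgment than a single threshold.

```python
MINUTES_PER_DAY = 24 * 60


def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Downtime allowed within the window by an availability SLO such as 0.999."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction strictly between 0 and 1")
    return (1.0 - slo) * window_days * MINUTES_PER_DAY


def should_hand_back_pager(slo: float, downtime_minutes: float, window_days: int = 30) -> bool:
    """Hypothetical policy: return the pager to the dev team once the budget is exhausted."""
    return downtime_minutes > error_budget_minutes(slo, window_days)
```

For example, a 99.9% SLO over 30 days allows about 43.2 minutes of downtime; each additional nine shrinks the budget tenfold, to roughly 4.3 minutes at 99.99%. That is why "a whole 9" of extra uptime is a big deal.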

Also, before you go back and do anything dramatic at your company, realize that every company and culture is different. There is no single way, or general consensus, on things like how to ship software, how big a microservice can get, whether your deployments should be mutable or immutable (btw, mutable deployments are evil, IMO), multi-repo vs. monorepo, AWS/GCP vs. in-house, etc. The answers depend on factors like risk tolerance, the stage of the company, how regulated the industry is, what the stakes of failure are, etc. Personally and philosophically, I lean more towards ownership, self-serve (NoOps) and (re)usable tools. But you know best what works for you and your company.

DEV vs OPS - and the paradigm shift.

I'd like to close by sharing my perspective on this topic (it perhaps warrants an article of its own). I've been an Ops guy for more than a decade and have seen so much change in the way software is operated at scale, and in the way operations engineering is evolving as a discipline, that I feel compelled to tell you about it, so you can make better, more informed decisions.

Basically, with the rise of Cloud Computing, (Docker) Containers, Immutable Deployments, Kubernetes, Serverless, Istio, Envoy (for service mesh), Microservices, and the general democratization of distributed systems, the infrastructure space is undergoing a major transformation and paradigm shift. Business logic, by itself, is no longer the differentiator when it comes to creating value and innovating.

With all the abstractions (most of which leak), the operational complexity of running these distributed applications is also increasing proportionally (especially at scale). This is where Ops experts typically come in and add value. They make the economics of scale work. They are experts in Telemetry, Instrumentation, Monitoring, Tracing and Debuggability; in short, Observability, as it is called these days. It appears that Ops is "moving up the stack". Ops has come a long way: from racking and stacking servers (back in the late nineties) to tweaking the kernel, running packet traces and managing configuration. These days, Ops folks focus on building infrastructure and Container Orchestration, and on building observability stacks. Some also focus on how apps are built, tested, deployed and provisioned (test infrastructure and frameworks). Some focus more on middleware and the edge/ingress. The core software teams building the mobile apps and the business logic need this whole ecosystem to be successful and to rapidly innovate at scale.

Given the paradigm shift, it is even more critical that software developers also learn how to operate their complex distributed systems at scale; otherwise, they will become irrelevant sooner rather than later. If you are a dev team that calls a different team each time you need to analyze a distributed trace, run a packet capture, or debug a complex production defect, that strategy will not only slow you down, it will also dramatically increase your TTR, thereby frustrating customers and ultimately hurting your business.

In fact, at Yahoo, I was the primary driver behind launching Yahoo's Daily Fantasy in 2015 in this model (you wrote it, you own/run it). This was a radical departure from how software was traditionally operated at scale. The model was simple: the dev team was responsible for almost all aspects of the development lifecycle, from design to writing code to writing tests to going through multiple architecture/senior tech council discussions to the Paranoids' security reviews. They were also responsible for testing and deploying the code they wrote (using CD). And finally, they operated and scaled it: monitoring, on call, capacity, postmortems, etc. They were their own Ops.

The Ops team, on the other hand, helped with the product launch in areas like documentation, DNS, CDN, infrastructure provisioning, monitoring and load balancing, and in understanding how all the components are interconnected in a complex distributed system. They also helped with writing playbooks, deploying software continuously multiple times a day, rolling back, etc. But the Ops team was mostly hands-off after that. They still provide consulting and expert services periodically and are the go-to POC for things like tracing, monitoring, on call, capacity, infrastructure, etc.

The beauty of such a model is that it encourages ownership, holds people accountable and has led to great outcomes. Engineers on that team really care about customers. To give an example: once, when an underage customer tried to register to play daily fantasy, an alert (an HTTP 4XX) triggered and went directly to the dev on call. The dev responded immediately, verified that the system did what it was supposed to do (i.e., did not allow the person to register), created a ticket to follow up on making the alert more actionable (no need to wake someone up at 3 am if the alert is not actionable) and went back to bed. Awesome, right?

Anyway, if you have come this far, you have done great. Good job! It means you are serious about bringing about transformational change at scale. Good luck!

If you found this article useful, leave a comment or like it; it goes a long way in motivating people to produce more. Thank you!
